This document covers recommended best practices and methods for building efficient images.

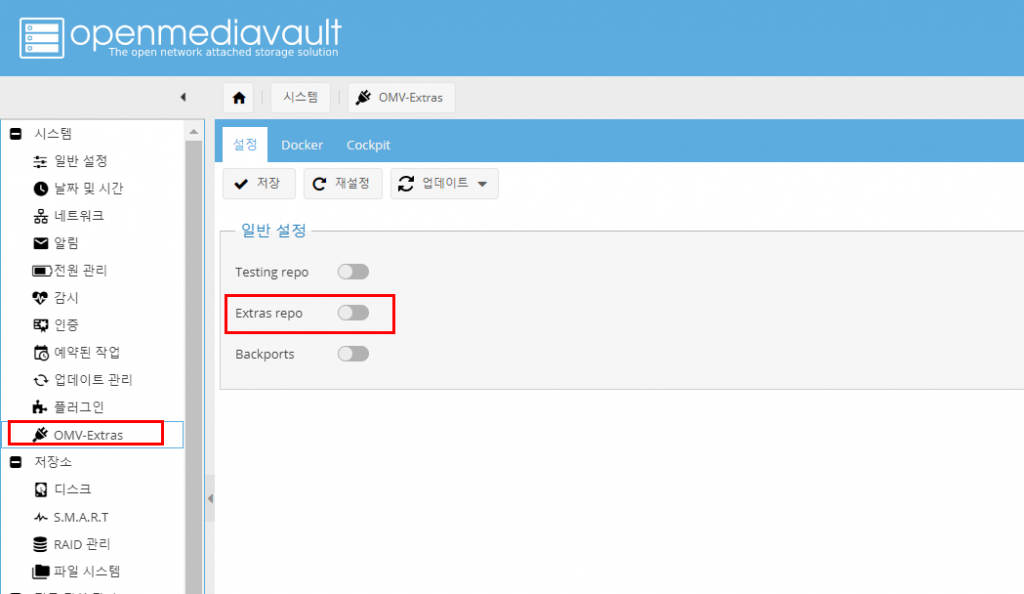

Feb 11, 2021 Build a TensorFlow pip package from source and install it on Ubuntu Linux and macOS. While the instructions might work for other systems, it is only tested and supported for Ubuntu and macOS. Install Docker using the Ubuntu online guide or these instructions: sudo apt-get update sudo apt-get install wget wget-qO-https: // get.

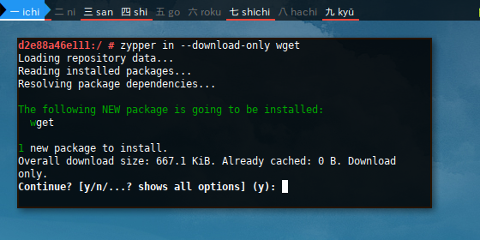

- In this article, you will learn how to install wget non-interactive network downloader in Linux. Wget is a tool developed by the GNU project used for retrieving or downloading files from web or FTP servers.

- Docker 安装 Tomcat 方法一、docker pull tomcat 查找 Docker Hub 上的 Tomcat 镜像: 可以通过 Sort by 查看其他版本的 tomcat,默认是最新版本 tomcat:latest。 此外,我们还可以用 docker search tomcat 命令来查看可用版本: runoob@runoob:/tomcat$ docker search tomcat NAME.

Docker builds images automatically by reading the instructions from a Dockerfile, a text file that contains all commands, in order, needed to build a given image. A Dockerfile adheres to a specific format and set of instructions which you can find at Dockerfile reference.

A Docker image consists of read-only layers, each of which represents a Dockerfile instruction. The layers are stacked and each one is a delta of the changes from the previous layer. Consider a Dockerfile with four instructions: FROM, COPY, RUN, and CMD.
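A minimal sketch of such a Dockerfile, consistent with the instructions described here. The /app path and the app.py script are illustrative assumptions, and the stock ubuntu:18.04 image ships neither make nor python, so a real build would have to install them first:

```dockerfile
# Base layer taken from the ubuntu:18.04 image
FROM ubuntu:18.04
# Adds the files from the client's current directory (assumed to hold a Makefile)
COPY . /app
# Builds the application with make
RUN make -C /app
# Command that runs when a container starts (app.py is a hypothetical script)
CMD ["python", "/app/app.py"]
```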

Each instruction creates one layer:

- FROM creates a layer from the ubuntu:18.04 Docker image.
- COPY adds files from your Docker client’s current directory.
- RUN builds your application with make.
- CMD specifies what command to run within the container.

When you run an image and generate a container, you add a new writable layer (the “container layer”) on top of the underlying layers. All changes made to the running container, such as writing new files, modifying existing files, and deleting files, are written to this thin writable container layer.

For more on image layers (and how Docker builds and stores images), see About storage drivers.

General guidelines and recommendations

Create ephemeral containers

The image defined by your Dockerfile should generate containers that are as ephemeral as possible. By “ephemeral”, we mean that the container can be stopped and destroyed, then rebuilt and replaced with an absolute minimum set up and configuration.

Refer to Processes under The Twelve-factor App methodology to get a feel for the motivations of running containers in such a stateless fashion.

Understand build context

When you issue a docker build command, the current working directory is called the build context. By default, the Dockerfile is assumed to be located here, but you can specify a different location with the file flag (-f). Regardless of where the Dockerfile actually lives, all recursive contents of files and directories in the current directory are sent to the Docker daemon as the build context.

Build context example

Create a directory for the build context and cd into it. Write “hello” into a text file named hello and create a Dockerfile that runs cat on it. Build the image from within the build context (.):
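The Dockerfile for this step can be very small. The sketch below assumes busybox as the base image, which is a choice made here for illustration rather than something the text prescribes:

```dockerfile
FROM busybox
# Copy the hello file from the root of the build context into the image
COPY /hello /
# Print its contents during the build
RUN cat /hello
```

Running docker build -t helloapp:v1 . from inside the directory that holds both files would build it; the helloapp:v1 tag is an illustrative name.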

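Move Dockerfile and hello into separate directories and build a second version of the image (without relying on cache from the last build). Use -f to point to the Dockerfile and specify the directory of the build context, for example docker build --no-cache -t helloapp:v2 -f dockerfiles/Dockerfile context (the directory names dockerfiles and context, and the tag, are illustrative).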

Inadvertently including files that are not necessary for building an image results in a larger build context and larger image size. This can increase the time to build the image, time to pull and push it, and the container runtime size. To see how big your build context is, look for the “Sending build context to Docker daemon” message, which reports the size of the context, when building your Dockerfile.

Pipe Dockerfile through stdin

Docker has the ability to build images by piping Dockerfile through stdin with a local or remote build context. Piping a Dockerfile through stdin can be useful to perform one-off builds without writing a Dockerfile to disk, or in situations where the Dockerfile is generated, and should not persist afterwards.

The examples in this section use here documents for convenience, but any method to provide the Dockerfile on stdin can be used.

For example, piping the Dockerfile text from echo into docker build - is equivalent to feeding the same text to docker build - from a here document (docker build -<<EOF … EOF).

You can substitute the examples with your preferred approach, or the approach that best fits your use-case.

Build an image using a Dockerfile from stdin, without sending build context

Use this syntax to build an image using a Dockerfile from stdin, without sending additional files as build context. The hyphen (-) takes the position of the PATH, and instructs Docker to read the build context (which only contains a Dockerfile) from stdin instead of a directory: docker build [OPTIONS] -

The following example builds an image using a Dockerfile that is passed through stdin. No files are sent as build context to the daemon.
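As a sketch, a Dockerfile that needs nothing from the build context could be as short as this (busybox and the echoed text are illustrative choices):

```dockerfile
FROM busybox
# No COPY or ADD, so no files from a build context are required
RUN echo "hello world"
```

Passing these two lines on stdin to docker build -t myimage:latest - (for instance through a here document) builds the image without sending any files to the daemon; myimage:latest is an assumed tag.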

Omitting the build context can be useful in situations where your Dockerfile does not require files to be copied into the image, and improves the build-speed, as no files are sent to the daemon.

If you want to improve the build-speed by excluding some files from the build-context, refer to exclude with .dockerignore.

Note: Attempting to build a Dockerfile that uses COPY or ADD will fail if this syntax is used. The following example illustrates this:
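A sketch of a Dockerfile that fails this way when piped to docker build - without a context. The file name somefile.txt is hypothetical:

```dockerfile
FROM busybox
# Fails when the Dockerfile is the only thing sent on stdin:
# somefile.txt is not part of the (empty) build context
COPY somefile.txt ./
RUN cat /somefile.txt
```

The build stops at the COPY step because the daemon has no somefile.txt to copy.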

Build from a local build context, using a Dockerfile from stdin

Use this syntax to build an image using files on your local filesystem, but using a Dockerfile from stdin. The syntax uses the -f (or --file) option to specify the Dockerfile to use, using a hyphen (-) as filename to instruct Docker to read the Dockerfile from stdin: docker build [OPTIONS] -f- PATH

For example, with the current directory (.) as the build context and a Dockerfile passed through stdin using a here document, the invocation takes the form docker build -t myimage:latest -f- . <<EOF … EOF, where the tag myimage:latest is illustrative.

Build from a remote build context, using a Dockerfile from stdin

Use this syntax to build an image using files from a remote git repository, using a Dockerfile from stdin. The syntax uses the -f (or --file) option to specify the Dockerfile to use, using a hyphen (-) as filename to instruct Docker to read the Dockerfile from stdin: docker build [OPTIONS] -f- PATH

This syntax can be useful in situations where you want to build an image from a repository that does not contain a Dockerfile, or if you want to build with a custom Dockerfile, without maintaining your own fork of the repository.

The example below builds an image using a Dockerfile from stdin, and adds the hello.c file from the “hello-world” Git repository on GitHub.
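A sketch of the Dockerfile side of such a build. It assumes the remote context is the docker-library/hello-world repository and that hello.c sits at the repository root:

```dockerfile
FROM busybox
# hello.c comes from the cloned repository, not from the local filesystem
COPY hello.c ./
```

Supplying these lines on stdin to docker build -t myimage:latest -f- https://github.com/docker-library/hello-world.git uses the repository as the build context; the tag is an assumed name.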

Under the hood

When building an image using a remote Git repository as build context, Docker performs a git clone of the repository on the local machine, and sends those files as build context to the daemon. This feature requires git to be installed on the host where you run the docker build command.

Exclude with .dockerignore

To exclude files not relevant to the build (without restructuring your source repository) use a .dockerignore file. This file supports exclusion patterns similar to .gitignore files. For information on creating one, see the .dockerignore file.

Use multi-stage builds

Multi-stage builds allow you to drastically reduce the size of your final image, without struggling to reduce the number of intermediate layers and files.

Because an image is built during the final stage of the build process, you can minimize image layers by leveraging build cache.

For example, if your build contains several layers, you can order them from the less frequently changed (to ensure the build cache is reusable) to the more frequently changed:

- Install tools you need to build your application
- Install or update library dependencies
- Generate your application

A Dockerfile for a Go application could look like:
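The following is a sketch under stated assumptions rather than a canonical layout: it assumes a Go modules project with go.mod and go.sum at the root of the build context, a main package in that same directory, and the golang:1.21-alpine tag as the build image. The binary name project is hypothetical.

```dockerfile
FROM golang:1.21-alpine AS build

# Install tools required for the project (changes rarely)
RUN apk add --no-cache git

WORKDIR /src

# Copy only the dependency manifests first; these layers are rebuilt
# only when go.mod or go.sum change
COPY go.mod go.sum ./
RUN go mod download

# Copy the rest of the project and build it; this layer is rebuilt
# whenever a source file changes
COPY . .
RUN CGO_ENABLED=0 go build -o /bin/project .

# Final stage: only the compiled binary is carried over
FROM scratch
COPY --from=build /bin/project /bin/project
ENTRYPOINT ["/bin/project"]
CMD ["--help"]
```

The instructions follow the ordering listed above: tools first, dependencies second, application last, so a source-only change reuses the cached tool and dependency layers.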

Don’t install unnecessary packages

To reduce complexity, dependencies, file sizes, and build times, avoid installing extra or unnecessary packages just because they might be “nice to have.” For example, you don’t need to include a text editor in a database image.

Decouple applications

Each container should have only one concern. Decoupling applications into multiple containers makes it easier to scale horizontally and reuse containers. For instance, a web application stack might consist of three separate containers, each with its own unique image, to manage the web application, database, and an in-memory cache in a decoupled manner.

Limiting each container to one process is a good rule of thumb, but it is not a hard and fast rule. For example, not only can containers be spawned with an init process, some programs might spawn additional processes of their own accord. For instance, Celery can spawn multiple worker processes, and Apache can create one process per request.

Use your best judgment to keep containers as clean and modular as possible. If containers depend on each other, you can use Docker container networks to ensure that these containers can communicate.

Minimize the number of layers

In older versions of Docker, it was important that you minimized the number of layers in your images to ensure they were performant. The following features were added to reduce this limitation:

- Only the instructions RUN, COPY, ADD create layers. Other instructions create temporary intermediate images, and do not increase the size of the build.
- Where possible, use multi-stage builds, and only copy the artifacts you need into the final image. This allows you to include tools and debug information in your intermediate build stages without increasing the size of the final image.

Sort multi-line arguments

Whenever possible, ease later changes by sorting multi-line arguments alphanumerically. This helps to avoid duplication of packages and makes the list much easier to update. This also makes PRs a lot easier to read and review. Adding a space before a backslash (\) helps as well.

Here’s an example from the buildpack-deps image:
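A sketch of what such a sorted list looks like, modeled on the version-control packages that buildpack-deps installs. The exact package set and the ubuntu:18.04 base are assumptions made for illustration:

```dockerfile
FROM ubuntu:18.04
# One package per line, sorted alphabetically, with a space before each backslash
RUN apt-get update && apt-get install -y \
    git \
    mercurial \
    openssh-client \
    procps \
    subversion \
 && rm -rf /var/lib/apt/lists/*
```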

Leverage build cache

When building an image, Docker steps through the instructions in your Dockerfile, executing each in the order specified. As each instruction is examined, Docker looks for an existing image in its cache that it can reuse, rather than creating a new (duplicate) image.

If you do not want to use the cache at all, you can use the --no-cache=true option on the docker build command. However, if you do let Docker use its cache, it is important to understand when it can, and cannot, find a matching image. The basic rules that Docker follows are outlined below:

- Starting with a parent image that is already in the cache, the next instruction is compared against all child images derived from that base image to see if one of them was built using the exact same instruction. If not, the cache is invalidated.
- In most cases, simply comparing the instruction in the Dockerfile with one of the child images is sufficient. However, certain instructions require more examination and explanation.
- For the ADD and COPY instructions, the contents of the file(s) in the image are examined and a checksum is calculated for each file. The last-modified and last-accessed times of the file(s) are not considered in these checksums. During the cache lookup, the checksum is compared against the checksum in the existing images. If anything has changed in the file(s), such as the contents and metadata, then the cache is invalidated.
- Aside from the ADD and COPY commands, cache checking does not look at the files in the container to determine a cache match. For example, when processing a RUN apt-get -y update command the files updated in the container are not examined to determine if a cache hit exists. In that case just the command string itself is used to find a match.

Once the cache is invalidated, all subsequent Dockerfile commands generate new images and the cache is not used.
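The rules above can be read off a small annotated Dockerfile. This is a sketch only; the /app path and the presence of a Makefile in the build context are assumptions:

```dockerfile
FROM ubuntu:18.04

# RUN: only the command string is compared, so this step is reused from
# the cache until the text of the instruction itself changes
RUN apt-get update && apt-get install -y make

# COPY: checksums of the copied files are compared, so changing any copied
# file invalidates the cache from this step onward
COPY . /app

# Re-executed whenever the COPY step above was invalidated
RUN make -C /app
```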

Dockerfile instructions

These recommendations are designed to help you create an efficient and maintainable Dockerfile.

FROM

Whenever possible, use current official images as the basis for your images. We recommend the Alpine image as it is tightly controlled and small in size (currently under 5 MB), while still being a full Linux distribution.

LABEL

You can add labels to your image to help organize images by project, record licensing information, to aid in automation, or for other reasons. For each label, add a line beginning with LABEL and with one or more key-value pairs. The following examples show the different acceptable formats. Explanatory comments are included inline.

Strings with spaces must be quoted or the spaces must be escaped. Inner quote characters ("), must also be escaped.
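A sketch of the individual-label formats described above. All keys and values (the com.example namespace, the vendor names, the dates) are illustrative:

```dockerfile
FROM busybox
# Set one or more individual labels
LABEL com.example.version="0.0.1-beta"
# A value containing a space, quoted
LABEL vendor1="ACME Incorporated"
# A value containing a space, escaped instead of quoted
LABEL vendor2=ZENITH\ Incorporated
LABEL com.example.release-date="2015-02-12"
# An empty value is allowed
LABEL com.example.version.is-production=""
```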

An image can have more than one label. Prior to Docker 1.10, it was recommended to combine all labels into a single LABEL instruction, to prevent extra layers from being created. This is no longer necessary, but combining labels is still supported.

The above can also be written as:
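As a sketch, several labels combined into a single LABEL instruction, with line-continuation characters to break the long line. The keys and values are illustrative:

```dockerfile
FROM busybox
# Set multiple labels at once, one key-value pair per continued line
LABEL vendor=ACME\ Incorporated \
      com.example.is-production="" \
      com.example.version="0.0.1-beta" \
      com.example.release-date="2015-02-12"
```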

See Understanding object labels for guidelines about acceptable label keys and values. For information about querying labels, refer to the items related to filtering in Managing labels on objects. See also LABEL in the Dockerfile reference.

RUN

Split long or complex RUN statements on multiple lines separated with backslashes to make your Dockerfile more readable, understandable, and maintainable.

apt-get

Probably the most common use-case for RUN is an application of apt-get. Because it installs packages, the RUN apt-get command has several gotchas to look out for.

Always combine RUN apt-get update with apt-get install in the same RUN statement. For example:
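A minimal sketch of the combined form, assuming an ubuntu:18.04 base and using curl and nginx as example packages:

```dockerfile
FROM ubuntu:18.04
# update and install share one RUN instruction, and therefore one cache entry
RUN apt-get update && apt-get install -y \
    curl \
    nginx
```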

Using apt-get update alone in a RUN statement causes caching issues and subsequent apt-get install instructions fail. For example, say you have a Dockerfile:
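A sketch of the problematic layout, with apt-get update in a RUN instruction of its own (ubuntu:18.04 and curl are illustrative choices):

```dockerfile
FROM ubuntu:18.04
# Cached on its own: not re-run when the install line below changes
RUN apt-get update
RUN apt-get install -y curl
```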

After building the image, all layers are in the Docker cache. Suppose you later modify apt-get install by adding an extra package:
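A sketch of such a modified Dockerfile, assuming the earlier version installed only curl and nginx is the package being added:

```dockerfile
FROM ubuntu:18.04
# Unchanged instruction text, so this layer is taken from the cache
RUN apt-get update
# Changed instruction: runs again, but against the stale package index
RUN apt-get install -y curl nginx
```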

Docker sees the unchanged RUN apt-get update instruction as identical to the cached one and reuses the cache from previous steps. As a result the apt-get update is not executed because the build uses the cached version. Because the apt-get update is not run, your build can potentially get an outdated version of the curl and nginx packages.

Using RUN apt-get update && apt-get install -y ensures your Dockerfile installs the latest package versions with no further coding or manual intervention. This technique is known as “cache busting”. You can also achieve cache-busting by specifying a package version. This is known as version pinning, for example package-foo=1.3.* (package-foo is a placeholder name).

Version pinning forces the build to retrieve a particular version regardless of what’s in the cache. This technique can also reduce failures due to unanticipated changes in required packages.

Below is a well-formed RUN instruction that demonstrates all the apt-get recommendations.
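A shortened sketch of such an instruction. It is a fragment meant to follow the FROM line of a Debian-based image whose repositories carry s3cmd 1.1.* (an assumption; on releases that ship s3cmd 2.x this pin would fail), and the other package names are examples:

```dockerfile
# update and install in one instruction, packages sorted one per line,
# one version pinned, and the apt lists removed in the same layer
RUN apt-get update && apt-get install -y \
    build-essential \
    curl \
    mercurial \
    s3cmd=1.1.* \
 && rm -rf /var/lib/apt/lists/*
```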

The s3cmd argument specifies a version 1.1.*. If the image previously used an older version, specifying the new one causes a cache bust of apt-get update and ensures the installation of the new version. Listing packages on each line can also prevent mistakes in package duplication.

In addition, when you clean up the apt cache by removing /var/lib/apt/lists it reduces the image size, since the apt cache is not stored in a layer. Since the RUN statement starts with apt-get update, the package cache is always refreshed prior to apt-get install.

Official Debian and Ubuntu images automatically run apt-get clean, so explicit invocation is not required.

Using pipes

Some RUN commands depend on the ability to pipe the output of one command into another, using the pipe character (|), as in the following example:
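A sketch of such an instruction. It is a fragment that assumes the base image provides wget; https://some.site is a placeholder URL and /number an arbitrary output file:

```dockerfile
# Download a page and store its line count
RUN wget -O - https://some.site | wc -l > /number
```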

Docker executes these commands using the /bin/sh -c interpreter, which only evaluates the exit code of the last operation in the pipe to determine success. In the example above this build step succeeds and produces a new image so long as the wc -l command succeeds, even if the wget command fails.

If you want the command to fail due to an error at any stage in the pipe, prepend set -o pipefail && to ensure that an unexpected error prevents the build from inadvertently succeeding. For example:
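A sketch of the same kind of pipeline with pipefail enabled. It assumes the image's /bin/sh supports the option and provides wget; the URL and output path are placeholders:

```dockerfile
# With pipefail, a failing wget makes the whole RUN step fail
RUN set -o pipefail && wget -O - https://some.site | wc -l > /number
```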

Not all shells support the -o pipefail option.

In cases such as the dash shell on Debian-based images, consider using the exec form of RUN to explicitly choose a shell that does support the pipefail option. For example:
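A sketch of the exec form, assuming /bin/bash and wget are present in the image; the URL and output path are placeholders:

```dockerfile
# Exec form: the JSON array names bash explicitly instead of relying on /bin/sh
RUN ["/bin/bash", "-c", "set -o pipefail && wget -O - https://some.site | wc -l > /number"]
```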

CMD

The CMD instruction should be used to run the software contained in your image, along with any arguments. CMD should almost always be used in the form of CMD ["executable", "param1", "param2"…]. Thus, if the image is for a service, such as Apache and Rails, you would run something like CMD ["apache2","-DFOREGROUND"]. Indeed, this form of the instruction is recommended for any service-based image.

In most other cases, CMD should be given an interactive shell, such as bash, python and perl. For example, CMD ["perl", "-de0"], CMD ["python"], or CMD ["php", "-a"]. Using this form means that when you execute something like docker run -it python, you’ll get dropped into a usable shell, ready to go. CMD should rarely be used in the manner of CMD ["param", "param"] in conjunction with ENTRYPOINT, unless you and your expected users are already quite familiar with how ENTRYPOINT works.

EXPOSE

The EXPOSE instruction indicates the ports on which a container listens for connections. Consequently, you should use the common, traditional port for your application. For example, an image containing the Apache web server would use EXPOSE 80, while an image containing MongoDB would use EXPOSE 27017 and so on.

For external access, your users can execute docker run with a flag indicating how to map the specified port to the port of their choice. For container linking, Docker provides environment variables for the path from the recipient container back to the source (for example, MYSQL_PORT_3306_TCP).

ENV

To make new software easier to run, you can use ENV to update the PATH environment variable for the software your container installs. For example, ENV PATH=/usr/local/nginx/bin:$PATH ensures that CMD ["nginx"] just works.

The ENV instruction is also useful for providing required environment variables specific to services you wish to containerize, such as Postgres’s PGDATA.

Lastly, ENV can also be used to set commonly used version numbers so that version bumps are easier to maintain, as seen in the following example:
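A minimal sketch of the idea. The APP_MAJOR and APP_VERSION variables and the /usr/local/app-… layout are hypothetical names chosen for illustration:

```dockerfile
FROM busybox
# Version numbers are defined once and reused below
ENV APP_MAJOR=1.4
ENV APP_VERSION=1.4.2
# Stand-in for a real download or build step that depends on the version
RUN mkdir -p /usr/local/app-$APP_MAJOR/bin \
 && echo "app $APP_VERSION" > /usr/local/app-$APP_MAJOR/VERSION
ENV PATH=/usr/local/app-$APP_MAJOR/bin:$PATH
```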

Similar to having constant variables in a program (as opposed to hard-coding values), this approach lets you change a single ENV instruction to auto-magically bump the version of the software in your container.

Each ENV line creates a new intermediate layer, just like RUN commands. This means that even if you unset the environment variable in a future layer, it still persists in this layer and its value can be dumped. You can test this by creating a Dockerfile like the following, and then building it.
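A sketch of such a test. The variable name ADMIN_USER and its value are illustrative:

```dockerfile
FROM alpine
ENV ADMIN_USER="mark"
RUN echo $ADMIN_USER > ./mark
# Only unsets the variable inside this one RUN shell; the ENV value is kept
RUN unset ADMIN_USER
```

If the image is built as test (an assumed tag), docker run --rm test sh -c 'echo $ADMIN_USER' still prints mark.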

To prevent this, and really unset the environment variable, use a RUN command with shell commands, to set, use, and unset the variable all in a single layer. You can separate your commands with ; or &&. If you use the second method, and one of the commands fails, the docker build also fails. This is usually a good idea. Using \ as a line continuation character for Linux Dockerfiles improves readability. You could also put all of the commands into a shell script and have the RUN command just run that shell script.
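A sketch of the single-layer variant, again with ADMIN_USER as an illustrative variable name:

```dockerfile
FROM alpine
# Set, use, and unset the variable within one RUN instruction
RUN export ADMIN_USER="mark" \
    && echo $ADMIN_USER > ./mark \
    && unset ADMIN_USER
CMD sh
```

Because no ENV instruction is involved, a container started from this image has no ADMIN_USER variable in its environment.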

ADD or COPY

Although ADD and COPY are functionally similar, generally speaking, COPY is preferred. That’s because it’s more transparent than ADD. COPY only supports the basic copying of local files into the container, while ADD has some features (like local-only tar extraction and remote URL support) that are not immediately obvious. Consequently, the best use for ADD is local tar file auto-extraction into the image, as in ADD rootfs.tar.xz /.

If you have multiple Dockerfile steps that use different files from your context, COPY them individually, rather than all at once. This ensures that each step’s build cache is only invalidated (forcing the step to be re-run) if the specifically required files change.

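For example, copying only a requirements.txt file before the RUN step that installs from it, and running COPY . /tmp/ afterwards, results in fewer cache invalidations for the RUN step than if you put the COPY . /tmp/ before it.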
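A sketch of that ordering, assuming a Python project with a requirements.txt at the root of the build context and the python:3.12-slim image as base:

```dockerfile
FROM python:3.12-slim
# Only changes to requirements.txt invalidate the install step below
COPY requirements.txt /tmp/
RUN pip install --requirement /tmp/requirements.txt
# Changes to any other file invalidate the cache from here on only
COPY . /tmp/
```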

Because image size matters, using ADD to fetch packages from remote URLs is strongly discouraged; you should use curl or wget instead. That way you can delete the files you no longer need after they’ve been extracted and you don’t have to add another layer in your image. For example, you should avoid doing things like:
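A sketch of the discouraged pattern, as a fragment. The URL on example.com and the /usr/src/things path are placeholders:

```dockerfile
# The downloaded archive stays in its own layer even if deleted later
ADD https://example.com/big.tar.xz /usr/src/things/
RUN tar -xJf /usr/src/things/big.tar.xz -C /usr/src/things
RUN make -C /usr/src/things all
```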

And instead, do something like:
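A sketch of the preferred pattern, as a fragment that assumes curl, tar with xz support, and make are available in the image. The URL and path are placeholders:

```dockerfile
# Download, extract, and build in a single layer; the archive is never stored
RUN mkdir -p /usr/src/things \
    && curl -SL https://example.com/big.tar.xz \
    | tar -xJC /usr/src/things \
    && make -C /usr/src/things all
```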

For other items (files, directories) that do not require ADD’s tar auto-extraction capability, you should always use COPY.

ENTRYPOINT

The best use for ENTRYPOINT is to set the image’s main command, allowing that image to be run as though it was that command (and then use CMD as the default flags).

Let’s start with an example of an image for the command line tool s3cmd:
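A sketch of the relevant two instructions, as a fragment that assumes the earlier part of the Dockerfile has already installed s3cmd:

```dockerfile
# The image's main command
ENTRYPOINT ["s3cmd"]
# Default flags, used when docker run is given no arguments
CMD ["--help"]
```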

Now the image can be run with docker run s3cmd to show the command’s help, or with the right parameters to execute a command, for example docker run s3cmd ls s3://mybucket (mybucket is a placeholder bucket name).

This is useful because the image name can double as a reference to the binary as shown in the command above.

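The ENTRYPOINT instruction can also be used in combination with a helper script, allowing it to function in a similar way to the command above, even when starting the tool may require more than one step.

For example, the Postgres Official Image uses a helper script as its ENTRYPOINT. In outline, when the first argument is postgres the script prepares the data directory and then uses exec to start the server; for any other first argument it uses exec to run the given command as is.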

Configure app as PID 1

This script uses the exec Bash command so that the final running application becomes the container’s PID 1. This allows the application to receive any Unix signals sent to the container. For more, see the ENTRYPOINT reference.

The helper script is copied into the container and run via ENTRYPOINT on container start:
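A sketch of the corresponding instructions, as a fragment. The script name docker-entrypoint.sh is an assumption about how the helper script is named in the build context:

```dockerfile
# Copy the helper script into the image and make it the entry point
COPY ./docker-entrypoint.sh /
ENTRYPOINT ["/docker-entrypoint.sh"]
# Default argument passed to the script
CMD ["postgres"]
```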

This script allows the user to interact with Postgres in several ways.

It can simply start Postgres: docker run postgres

Or, it can be used to run Postgres and pass parameters to the server: docker run postgres postgres --help

Lastly, it could also be used to start a totally different tool, such as Bash: docker run --rm -it postgres bash

VOLUME

The VOLUME instruction should be used to expose any database storage area, configuration storage, or files/folders created by your docker container. You are strongly encouraged to use VOLUME for any mutable and/or user-serviceable parts of your image.

USER

If a service can run without privileges, use USER to change to a non-root user. Start by creating the user and group in the Dockerfile with something like RUN groupadd -r postgres && useradd --no-log-init -r -g postgres postgres.

Consider an explicit UID/GID

Users and groups in an image are assigned a non-deterministic UID/GID in that the “next” UID/GID is assigned regardless of image rebuilds. So, if it’s critical, you should assign an explicit UID/GID.
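A sketch of assigning an explicit UID and GID. The value 999, the debian:bookworm-slim base, and the postgres user name are illustrative choices:

```dockerfile
FROM debian:bookworm-slim
# Fixed GID and UID, so the IDs stay the same across rebuilds
RUN groupadd -r -g 999 postgres \
 && useradd --no-log-init -r -u 999 -g postgres postgres
# Later instructions and the container process run as this user
USER postgres
```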

Due to an unresolved bug in the Go archive/tar package’s handling of sparse files, attempting to create a user with a significantly large UID inside a Docker container can lead to disk exhaustion because /var/log/faillog in the container layer is filled with NULL (\0) characters. A workaround is to pass the --no-log-init flag to useradd. The Debian/Ubuntu adduser wrapper does not support this flag.

Avoid installing or using sudo as it has unpredictable TTY and signal-forwarding behavior that can cause problems. If you absolutely need functionality similar to sudo, such as initializing the daemon as root but running it as non-root, consider using “gosu”.

Lastly, to reduce layers and complexity, avoid switching USER back and forth frequently.

WORKDIR

For clarity and reliability, you should always use absolute paths for your WORKDIR. Also, you should use WORKDIR instead of proliferating instructions like RUN cd … && do-something, which are hard to read, troubleshoot, and maintain.

ONBUILD

An ONBUILD command executes after the current Dockerfile build completes. ONBUILD executes in any child image derived FROM the current image. Think of the ONBUILD command as an instruction the parent Dockerfile gives to the child Dockerfile.

A Docker build executes ONBUILD commands before any command in a child Dockerfile.

ONBUILD is useful for images that are going to be built FROM a given image. For example, you would use ONBUILD for a language stack image that builds arbitrary user software written in that language within the Dockerfile, as you can see in Ruby’s ONBUILD variants.

Images built with ONBUILD should get a separate tag, for example: ruby:1.9-onbuild or ruby:2.0-onbuild.

Be careful when putting ADD or COPY in ONBUILD. The “onbuild” image fails catastrophically if the new build’s context is missing the resource being added. Adding a separate tag, as recommended above, helps mitigate this by allowing the Dockerfile author to make a choice.
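A sketch of a parent Dockerfile that uses ONBUILD. The image name my-stack:onbuild and the /usr/src/app path are hypothetical:

```dockerfile
# Parent Dockerfile, assumed to be built and tagged as my-stack:onbuild
FROM busybox
WORKDIR /usr/src/app
# Recorded now; executed in a child build, before the child's own instructions
ONBUILD COPY . /usr/src/app
ONBUILD RUN ls /usr/src/app
```

A child Dockerfile consisting of just FROM my-stack:onbuild would then copy its own build context into /usr/src/app and list it, without repeating those instructions.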

Examples for Official Images

These Official Images have exemplary Dockerfiles:

Docker Hub

Additional resources: